Ok, so I finally got a chance to finish this three-part series. It’s been a long one, but better late than never. Since it’s been a while, I figured a quick recap is in order.

To show how to approach an unguided data project, I decided to use some Netflix data to demonstrate the process across three projects. The first was a fairly basic exploratory analysis of the dataset that used some foundational skills in data wrangling and visualization. The second used the Shiny package to upgrade some of those visualizations with dynamic elements. All in all, the case study shown here isn’t too difficult; it’s just a lot of busy work (aka real-life data work).

Since I had already explored the data, the next logical step was to put it to some real-life use. And what better way to do so than by creating the classic recommender system, a staple application of machine learning?

You’re probably familiar with recommender systems, largely thanks to the rise of YouTube, Amazon, and other consumer-facing streaming and web services. However, if you ever need a quick definition (say, in an interview for a data science position), you can describe a recommender system as a filtering application of an algorithm that tries to predict a user’s preferences (e.g., for movies, songs, video clips, or products) based on some known factors.

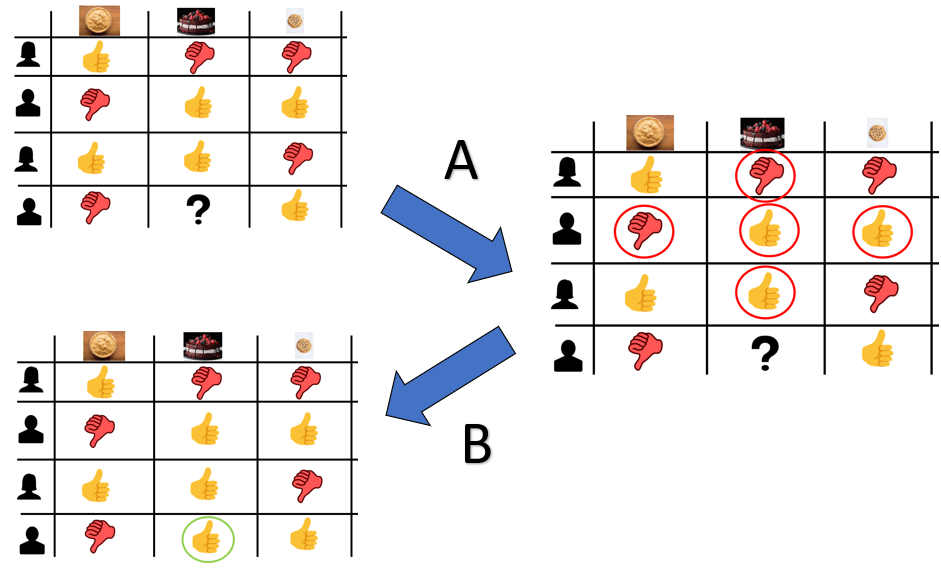

Generally speaking, there are a few major types of recommender systems in use. One is the collaborative filtering model. As the name suggests, it uses the input of many past users, usually their historical rating profiles, to produce recommendations for future users.

Figure 1. In this case, the rating history of past members for cakes, pies, and cookies helps predict whether to recommend cake as a dessert to a new person, based on that person’s opinions about pies and cookies.
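To make the dessert example concrete, here’s a minimal sketch of user-based collaborative filtering in R. Everything in it is hypothetical: the ratings matrix, the user names, and the helpers `cosine_sim()` and `predict_rating()`. It predicts a new user’s missing cake rating as a similarity-weighted average of other users’ cake ratings, where similarity is the cosine similarity over the desserts both users have rated.

```r
# Hypothetical 1-5 dessert ratings; NA means "not rated yet"
ratings = matrix(
  c(5,  4, 1,
    4,  5, 2,
    1,  2, 5,
    NA, 4, 1),
  nrow = 4, byrow = TRUE,
  dimnames = list(c("ann", "bob", "cat", "new_user"), c("cake", "pie", "cookie"))
)

# Cosine similarity between two users, using only the items both have rated
cosine_sim = function(a, b) {
  both = !is.na(a) & !is.na(b)
  sum(a[both] * b[both]) / (sqrt(sum(a[both]^2)) * sqrt(sum(b[both]^2)))
}

# Predict a user's rating for an item as the similarity-weighted average
# of the ratings that every other user gave that item
predict_rating = function(ratings, user, item) {
  others = setdiff(rownames(ratings)[!is.na(ratings[, item])], user)
  sims = sapply(others, function(o) cosine_sim(ratings[user, ], ratings[o, ]))
  sum(sims * ratings[others, item]) / sum(sims)
}

predict_rating(ratings, "new_user", "cake") # roughly 3.71
```

Because `ann` rated pie and cookie in exactly the same proportions as `new_user`, her cake rating gets the most weight. Real systems often mean-center ratings first, since raw cosine similarity between all-positive ratings tends to be high across the board.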

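While this method has the benefit of not needing domain knowledge to determine recommendations, it does have a few drawbacks, most notably the “cold start” problem (it has nothing to go on for new users or new items without a rating history) and sparsity (most users rate only a small fraction of the available items).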

While collaborative filtering would have been a perfectly valid option, this Netflix dataset has no data on user behavior; it only describes the content itself. Thus, we’ll have to use an alternative known as the content-based recommender system. As the name suggests, it relies on the descriptive profile of each item to generate recommendations.

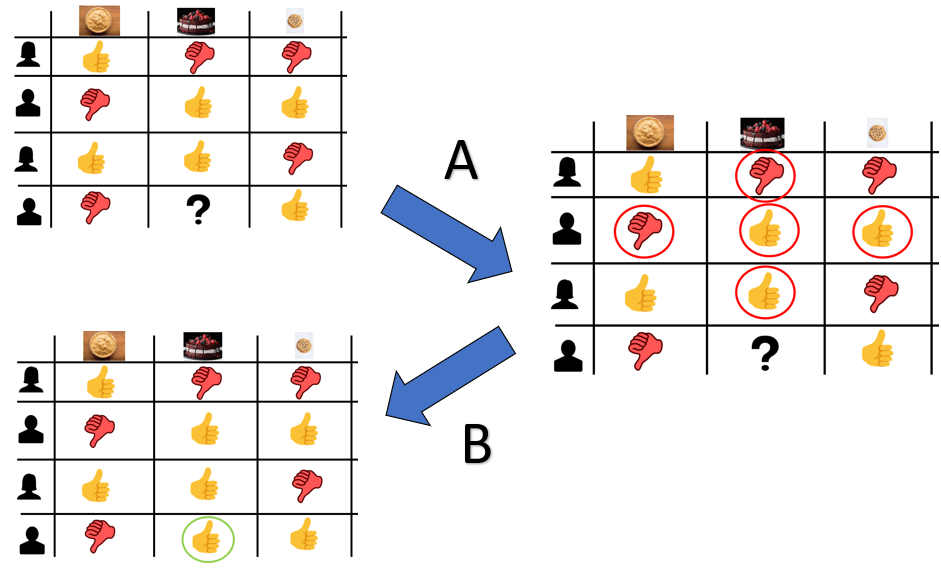

It’s similar to what happens when you go to a store and ask a salesperson to recommend a product (say, a car) based on the qualities that matter most to you. The difference is that the process is automated: instead of talking to a salesperson, you fill out some kind of user profile.

Figure 2. It's sort of like going to a car dealership to grab some options, but without the useless undercoat upsell.

While this method has its flaws, namely limited scalability and an inability to recommend anything outside your original preferences, it does sidestep the “cold start” and “sparsity” problems because it doesn’t rely on existing user-item connections to make predictions. Instead, it relies only on the features of each item, weighted by how important each feature is to the user.
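As a rough illustration of the feature-weighting idea, here’s a small sketch that scores each title by the weighted sum of its features. The catalogue, the feature names, and the user’s weights are all made up; the point is only that the ranking comes from item features and user-stated importance, with no other users involved.

```r
# Hypothetical catalogue: each title described by 0/1 content features
items = data.frame(
  title  = c("Title A", "Title B", "Title C", "Title D"),
  comedy = c(1, 0, 1, 0),
  drama  = c(0, 1, 1, 0),
  horror = c(0, 0, 0, 1),
  tv_ma  = c(0, 1, 0, 1)
)

# Hypothetical user profile: importance of each feature (higher = matters more)
weights = c(comedy = 3, drama = 1, horror = 0, tv_ma = 0.5)

# Score = weighted sum of a title's features, as a share of the maximum possible score
feature_matrix = as.matrix(items[, names(weights)])
items$score = as.vector(feature_matrix %*% weights) / sum(weights)

# Highest-scoring titles first
items[order(-items$score), c("title", "score")]
```

Here “Title C” ranks first because it has both of the features this user cares about most (comedy and drama).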

So that’s enough about the theory of recommender systems. Let’s move on to how to build one with our Netflix dataset.

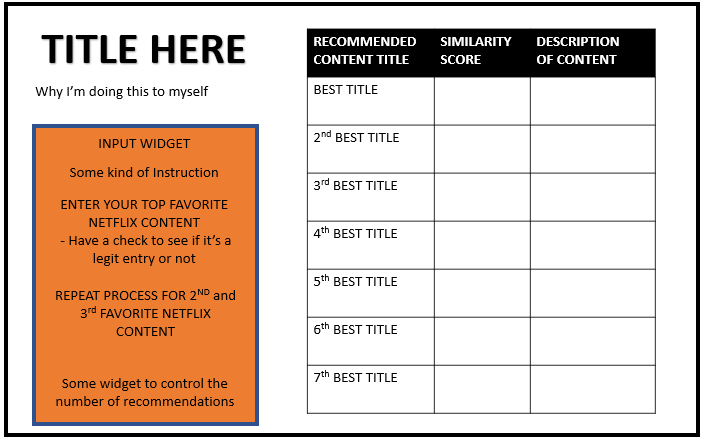

As always, we’ll start with a quick visual of how I would like this project to look. In my mind, it should look like this:

Figure 3. So clearly, we'll need to use the Shiny package here.

On the backend, there are many algorithms you could use to generate recommendations, and this choice is the first of many factors that determine the quality of your recommender system. Because they’re simple and still produce results fairly competitive with more complex algorithms, we’ll use two approaches to generate our recommendations: (1) clustering and (2) K-Nearest Neighbor (KNN) modelling.

Now that we’ve determined the recommender system type and method, the next important factor is how to measure similarity in the data to generate those recommendations. Typically, options like Euclidean or Manhattan distance work for most cases (as in KNN modelling). However, some care is needed here because certain distance metrics work better for particular data types. Since this dataset consists predominantly of qualitative data with a great deal of dimensionality, we’ll use alternatives to the typical distance metrics: (1) Jaccard distance for the KNN approach and (2) Gower’s distance, which measures dissimilarity between individuals described by mixed variable types, for the clustering approach. (The details of these metrics are beyond the scope of this post, but you can read them here, here, and here.) The TL;DR rationale for choosing them is that they let us measure distance between non-numerical data.
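For intuition on Jaccard distance: for two 0/1 vectors, it’s 1 minus the number of features switched on in both titles, divided by the number switched on in at least one. Features that are off in both titles are ignored, which suits sparse indicator data like genres and dummy-coded actors. The sketch below uses hypothetical vectors and a hypothetical `jaccard_distance()` helper to check a hand computation against R’s `dist(method = "binary")`, which uses the same definition.

```r
# Two hypothetical titles encoded as 0/1 indicator vectors
# (think drama, comedy, horror, tv_ma, is_english)
x = rbind(
  title_1 = c(1, 0, 0, 1, 1),
  title_2 = c(1, 1, 0, 0, 1)
)

# Jaccard distance by hand: 1 - |intersection| / |union| of the "on" features
jaccard_distance = function(a, b) {
  on_a = a != 0
  on_b = b != 0
  1 - sum(on_a & on_b) / sum(on_a | on_b)
}

manual = jaccard_distance(x["title_1", ], x["title_2", ])
built_in = as.matrix(dist(x, method = "binary"))["title_1", "title_2"]

manual   # 0.5: 2 shared features out of 4 switched on in either title
built_in # 0.5
```

The third feature is off in both titles, so it doesn’t affect either result.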

Unlike the KNN approach, the clustering approach will take three content inputs instead of just one, because we want to capture a user’s broader interest profile in streaming content when generating recommendations. Since the user ranks these three titles, they shouldn’t count equally, which means we’ll introduce a weighting factor into the algorithm. This factor should be large enough to distinguish between the choices, but small enough not to drastically change the recommendations.
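The post doesn’t spell out how the weighting factor enters the algorithm, so the following is only one simple possibility, not the author’s implementation: average each candidate’s dissimilarity to the three ranked favorites, weighting the top-ranked pick most. The dissimilarity matrix, the title IDs, and the weights 1.0/0.9/0.8 are hypothetical, chosen close together so the ranking nudges the results rather than dominating them.

```r
# Hypothetical dissimilarity matrix (e.g., Gower's distance) between 5 titles, values in [0, 1]
ids = c("s1", "s2", "s3", "s4", "s5")
d = matrix(
  c(0.0, 0.2, 0.7, 0.4, 0.9,
    0.2, 0.0, 0.6, 0.3, 0.8,
    0.7, 0.6, 0.0, 0.5, 0.1,
    0.4, 0.3, 0.5, 0.0, 0.6,
    0.9, 0.8, 0.1, 0.6, 0.0),
  nrow = 5, byrow = TRUE, dimnames = list(ids, ids)
)

# The user's three favorites in ranked order, with hypothetical rank weights
favorites = c("s1", "s3", "s2")
rank_weights = c(1.0, 0.9, 0.8)

# Weighted average dissimilarity from each candidate to the three favorites
# (row i of the sub-matrix is multiplied by rank_weights[i])
candidates = setdiff(ids, favorites)
score = colSums(d[favorites, candidates, drop = FALSE] * rank_weights) / sum(rank_weights)

sort(score) # smallest weighted dissimilarity = best recommendation
```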

Now that we know the general process, the last thing we need to determine is which of the available variables to use in our clustering approach. Normally, you would do some in-depth market research with a subsample of the target population to identify patterns between content and watchability (likely measured by ratings or viewing frequency). Since we have neither that knowledge nor the resources to go find it, the next best thing is to use our best judgment in selecting variables likely to affect watchability. After some initial exploration and a few reasonable assumptions, we’ll use the following variables from the dataset:

Because cast lists can be long, we’ll treat the headlining cast member (i.e., the lead actor or actress) as the most important influence on viewership and include only that person.
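Assuming the cast column stores all names in a single comma-separated string with the headliner listed first, the lead can be pulled out with base R alone. The post doesn’t show how `netflix_lead` was built, so the helper `lead_actor()` and the sample strings below are hypothetical.

```r
# Hypothetical cast strings: comma-separated names, headliner first
cast = c("Actor A, Actor B, Actor C", "Actor D", NA, "")

# Keep only the first listed (headlining) name; missing or empty casts become NA
lead_actor = function(cast) {
  lead = trimws(sub(",.*$", "", cast))
  lead[is.na(cast) | lead == ""] = NA
  lead
}

lead_actor(cast) # "Actor A" "Actor D" NA NA
```

Returning `NA` for missing casts fits the later pre-processing step, which relabels missing leads as "Unknown/No Lead".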

Now, let’s work through the plan step-by-step.

As with any data project, we begin with some pre-processing of the Netflix dataset. With both approaches to generating recommendations, the initial process will be the same. We need to accomplish the following:

```r
# Attach each title's lead cast member to the filtered dataset, naming the column cast_lead
netflix_combine = cbind(cast_lead = netflix_lead$cast_lead, netflix_modern_english)
```

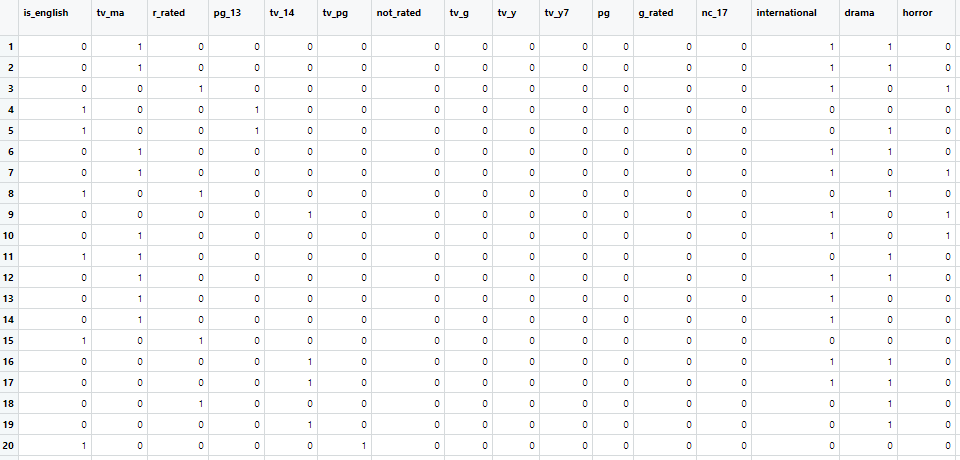

4. Distribute the listed genres and content ratings across separate indicator columns (one 0/1 column per genre group and per rating).

```r
library(dplyr)
library(stringr)

# One 0/1 indicator column per genre group (a title can belong to several groups)
netflix_combine = netflix_combine %>%
  mutate(
    international = as.integer(str_detect(listed_in, "International TV Shows|International Movies|British TV Shows|Spanish-Language TV Shows|Korean TV Shows")),
    drama = as.integer(str_detect(listed_in, "Dramas|TV Dramas")),
    horror = as.integer(str_detect(listed_in, "Horror Movies|TV Horror")),
    action_adventure = as.integer(str_detect(listed_in, "Action & Adventure|TV Action & Adventure")),
    crime = as.integer(str_detect(listed_in, "Crime TV Shows")),
    docu = as.integer(str_detect(listed_in, "Documentaries|Docuseries|Science & Nature TV")),
    # "Stand-[Uu]p Comedy" also covers "Stand-Up Comedy & Talk Shows"
    comedy = as.integer(str_detect(listed_in, "Comedies|TV Comedies|Stand-[Uu]p Comedy")),
    anime = as.integer(str_detect(listed_in, "Anime Features|Anime Series")),
    independent = as.integer(str_detect(listed_in, "Independent Movies")),
    sports = as.integer(str_detect(listed_in, "Sports? Movies")), # "Sport Movies" or "Sports Movies"
    reality = as.integer(str_detect(listed_in, "Reality TV")),
    sci_fi = as.integer(str_detect(listed_in, "TV Sci-Fi & Fantasy|Sci-Fi & Fantasy")),
    # "Kid'?s'? TV" matches "Kids' TV" as well as "Kid's TV"
    family = as.integer(str_detect(listed_in, "Kid'?s'? TV|Children & Family Movies|Teen TV Shows|Faith & Spirituality")),
    classic = as.integer(str_detect(listed_in, "Classic Movies|Cult Movies|Classic & Cult TV")),
    thriller_mystery = as.integer(str_detect(listed_in, "Thrillers|TV Thrillers|TV Mysteries")),
    musical = as.integer(str_detect(listed_in, "Music & Musicals")),
    romantic = as.integer(str_detect(listed_in, "Romantic TV Shows|Romantic Movies|LGBTQ Movies"))
  ) %>%
  select(-listed_in, -country)

# One 0/1 indicator column per content rating
netflix_combine = netflix_combine %>%
  mutate(
    tv_ma = ifelse(rating == "TV-MA", 1, 0),
    r_rated = ifelse(rating == "R", 1, 0),
    pg_13 = ifelse(rating == "PG-13", 1, 0),
    tv_14 = ifelse(rating == "TV-14", 1, 0),
    tv_pg = ifelse(rating == "TV-PG", 1, 0),
    not_rated = ifelse(rating %in% c("NR", "UR"), 1, 0),
    tv_g = ifelse(rating == "TV-G", 1, 0),
    tv_y = ifelse(rating == "TV-Y", 1, 0),
    tv_y7 = ifelse(rating %in% c("TV-Y7", "TV-Y7-FV"), 1, 0),
    pg = ifelse(rating == "PG", 1, 0),
    g_rated = ifelse(rating == "G", 1, 0),
    nc_17 = ifelse(rating == "NC-17", 1, 0)
  ) %>%
  select(-rating)

# Transform the newly formed variables into the appropriate data type
netflix_combine = netflix_combine %>%
  mutate(
    is_english = ifelse(is_english == "no", 0, 1),
    cast_lead = ifelse(is.na(cast_lead), "Unknown/No Lead", cast_lead)
  ) %>%
  mutate(cast_lead = as.factor(cast_lead))
```
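As an alternative to substring patterns, you could split each `listed_in` string on commas and test for exact genre labels. That avoids accidental partial matches (for example, "Dramas" also matching "TV Dramas" when you only wanted one of them). The genre groups and sample strings below are a hypothetical subset for illustration, not the full mapping used above.

```r
# A few hypothetical listed_in values in the dataset's comma-separated format
listed_in = c("Dramas, International Movies", "Horror Movies, Thrillers", "TV Dramas, TV Horror")

# Hypothetical subset of genre groups, each mapped to the exact labels it covers
genre_groups = list(
  drama = c("Dramas", "TV Dramas"),
  horror = c("Horror Movies", "TV Horror"),
  thriller_mystery = c("Thrillers", "TV Thrillers", "TV Mysteries")
)

# Split each title's genre string into its individual labels
labels_per_title = strsplit(listed_in, ",\\s*")

# One 0/1 column per group: 1 if any of the title's labels belongs to that group
genre_flags = sapply(genre_groups, function(group) {
  vapply(labels_per_title, function(labels) as.integer(any(labels %in% group)), integer(1))
})

genre_flags
```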

With the dataset now prepped, the next step is to turn the categorical variables into dummy variables (in the code below, the lead cast member in `cast_lead`) using the dummy_cols() function from the fastDummies package. Note: with 7,787 entries and a large number of distinct values in a given variable, this can take a while to run, or even fail, because every unique value becomes its own column. This illustrates why content-based recommender systems are difficult to scale.

```r
# "Dummify" the categorical variable: one 0/1 column per lead cast member
netflix_combine_for_knn = fastDummies::dummy_cols(
  netflix_combine,
  select_columns = c("cast_lead"),
  remove_selected_columns = TRUE
)
```
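To see why this step blows up, note that it creates one column per distinct lead cast member. Base R’s `model.matrix()` produces the same kind of full indicator coding; here is a toy sketch with a hypothetical `cast_lead` factor. It’s an alternative for illustration, not a reproduction of `fastDummies::dummy_cols()`.

```r
# Hypothetical lead-cast factor, like cast_lead after pre-processing
cast_lead = factor(c("Actor A", "Actor B", "Actor A", "Unknown/No Lead"))

# "- 1" drops the intercept, so every level gets its own 0/1 column
dummies = model.matrix(~ cast_lead - 1)
colnames(dummies) = levels(cast_lead)

dummies
ncol(dummies) # one column per distinct lead: grows with the number of unique actors
```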

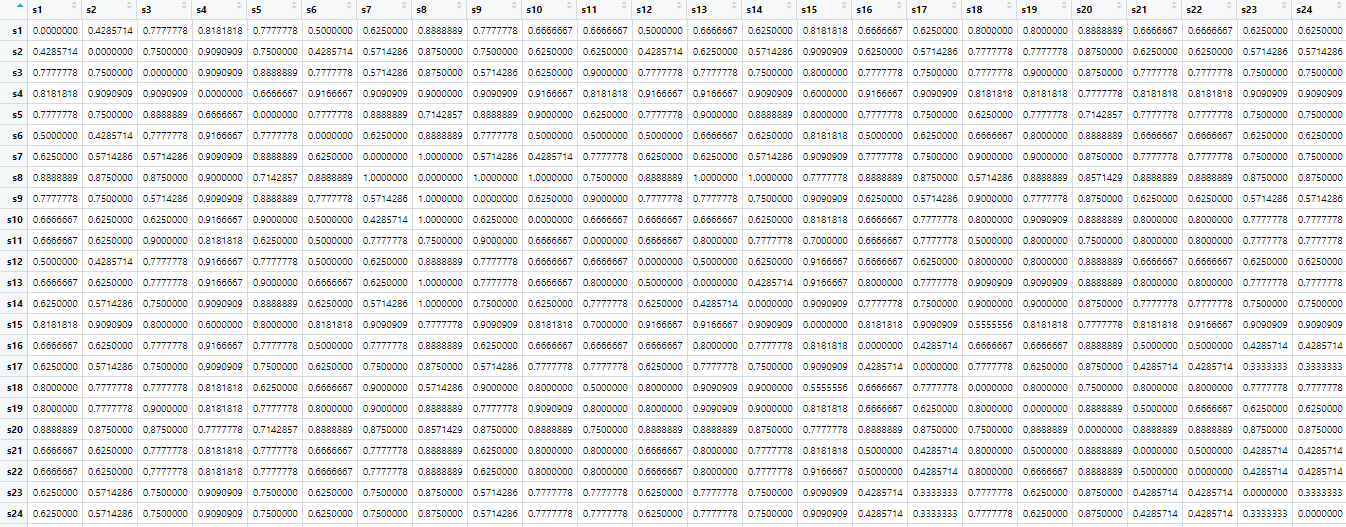

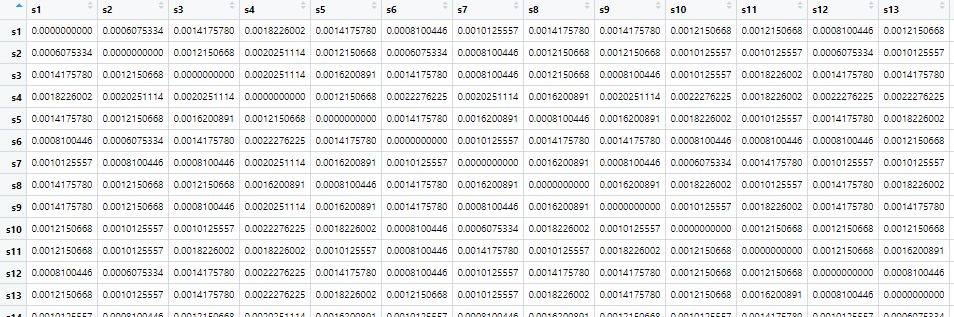

The next step is to create a matrix containing all of the relevant entries used by our algorithms (i.e., everything except the content’s title, its description, and whether it’s a movie or a TV show). This is where the two machine learning approaches start to differ. For the KNN model, we need to calculate the Jaccard distance, which the dist() function computes when you pass method = “binary”.

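```r
library(dplyr)

# Keep only the 0/1 indicator columns: drop the ID, title, description, and type
netflix_for_matrix = netflix_combine_for_knn %>%
  select(-show_id, -title, -description, -type)

# Use show_id for the row names; dist() carries them over as both row and column names
rownames(netflix_for_matrix) = netflix_combine_for_knn$show_id

# FOR KNN APPROACH: Jaccard distance between every pair of titles
netflix_matrix = as.matrix(dist(netflix_for_matrix, method = "binary"))
```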

When using the clustering approach with Gower’s distance as the metric, we need to use the daisy() function from the cluster package.


```r
library(cluster)

# FOR CLUSTERING APPROACH: Gower's distance between every pair of titles
dissimilarity_gower_1 = as.matrix(daisy(netflix_gower_combine_1, metric = "gower"))
row.names(dissimilarity_gower_1) = netflix_gower_combine$show_id
colnames(dissimilarity_gower_1) = netflix_gower_combine$show_id
```
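The post doesn’t show the clustering step itself, so here’s a hedged sketch of one way it could work. It computes Gower’s distance by hand (factors score 0 if equal and 1 otherwise, numeric columns score |x - y| divided by the column’s range, and the scores are averaged across columns), clusters with base R’s hierarchical `hclust()`, and recommends titles from the input’s cluster. The data, the helpers `gower_matrix()` and `recommend_same_cluster()`, average linkage, and k = 2 are all assumptions; `daisy(metric = "gower")` should give the same dissimilarities for factor and numeric columns like these.

```r
# Hypothetical mixed-type features for five titles
titles = data.frame(
  type   = factor(c("Movie", "Movie", "TV Show", "TV Show", "Movie")),
  rating = factor(c("R", "R", "TV-MA", "TV-14", "PG-13")),
  drama  = c(1, 1, 0, 0, 1),
  comedy = c(0, 0, 1, 1, 0),
  row.names = c("s1", "s2", "s3", "s4", "s5")
)

# Gower dissimilarity: average over columns of a per-column score in [0, 1]
gower_matrix = function(df) {
  n = nrow(df)
  total = matrix(0, n, n, dimnames = list(rownames(df), rownames(df)))
  for (col in names(df)) {
    x = df[[col]]
    if (is.numeric(x)) {
      r = diff(range(x))
      part = if (r > 0) abs(outer(x, x, "-")) / r else matrix(0, n, n)
    } else {
      part = 1 * outer(as.character(x), as.character(x), "!=")
    }
    total = total + part
  }
  total / ncol(df)
}

d = gower_matrix(titles)

# Group titles with hierarchical clustering on the Gower dissimilarities
clusters = cutree(hclust(as.dist(d), method = "average"), k = 2)

# Recommend the other titles that share the input title's cluster
recommend_same_cluster = function(id, clusters) {
  setdiff(names(clusters)[clusters == clusters[[id]]], id)
}

recommend_same_cluster("s1", clusters) # "s2" "s5"
```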

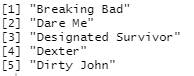

The last step is to create a function to generate recommendations. For the KNN approach, this involves (1) creating vectors to store the findings, (2) looping through each entry in the dataset while skipping the input title itself, and (3) gathering the top matches based on the chosen K. Here, a shorter distance means a closer presumed match to the input.


```r
library(dplyr)

new_recommendation = function(title, data, matrix, k, reference_data) {
  # Translate the input title to its show_id
  show_id = reference_data$show_id[which(reference_data$title == title)[1]]
  if (is.na(show_id) || !(show_id %in% rownames(matrix))) {
    stop("Title not found in the distance matrix: ", title)
  }

  # Holder vectors to store the findings
  id = rep(NA_character_, nrow(data))
  metric = rep(NA_real_, nrow(data))

  for (i in seq_len(nrow(data))) {
    if (rownames(data)[i] == show_id) next # skip the input title itself
    id[i] = colnames(matrix)[i]
    metric[i] = matrix[show_id, i]
  }

  # Look up the descriptive fields for each candidate by show_id,
  # so rows line up even if reference_data is ordered differently
  ref = reference_data[match(id, reference_data$show_id), ]
  choices = data.frame(
    id, content_title = ref$title, description = ref$description,
    metric, type = ref$type, country = ref$country, genres = ref$listed_in
  )

  # A smaller Jaccard distance means a closer match; drop the skipped input row
  choices = choices %>% filter(!is.na(metric)) %>% arrange(metric)
  return(head(choices, k))
}

View(new_recommendation("The Other Guys", netflix_for_matrix, netflix_matrix, 15, netflix))
```
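The loop in `new_recommendation()` can also be written in a vectorized way: take the input title’s row of the distance matrix, drop the title itself, and order the rest. The toy features and the helper `top_k_ids()` below are hypothetical.

```r
# Hypothetical 0/1 feature rows for four titles
features = rbind(
  s1 = c(1, 0, 1, 1),
  s2 = c(1, 0, 1, 0),
  s3 = c(0, 1, 0, 1),
  s4 = c(1, 1, 1, 1)
)
jaccard = as.matrix(dist(features, method = "binary"))

# Return the k nearest titles to `id` (smallest distance first), excluding `id` itself
top_k_ids = function(dist_matrix, id, k) {
  row = dist_matrix[id, ]
  row = row[names(row) != id]
  names(row)[order(row)][seq_len(min(k, length(row)))]
}

top_k_ids(jaccard, "s1", 2) # "s4" "s2"
```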

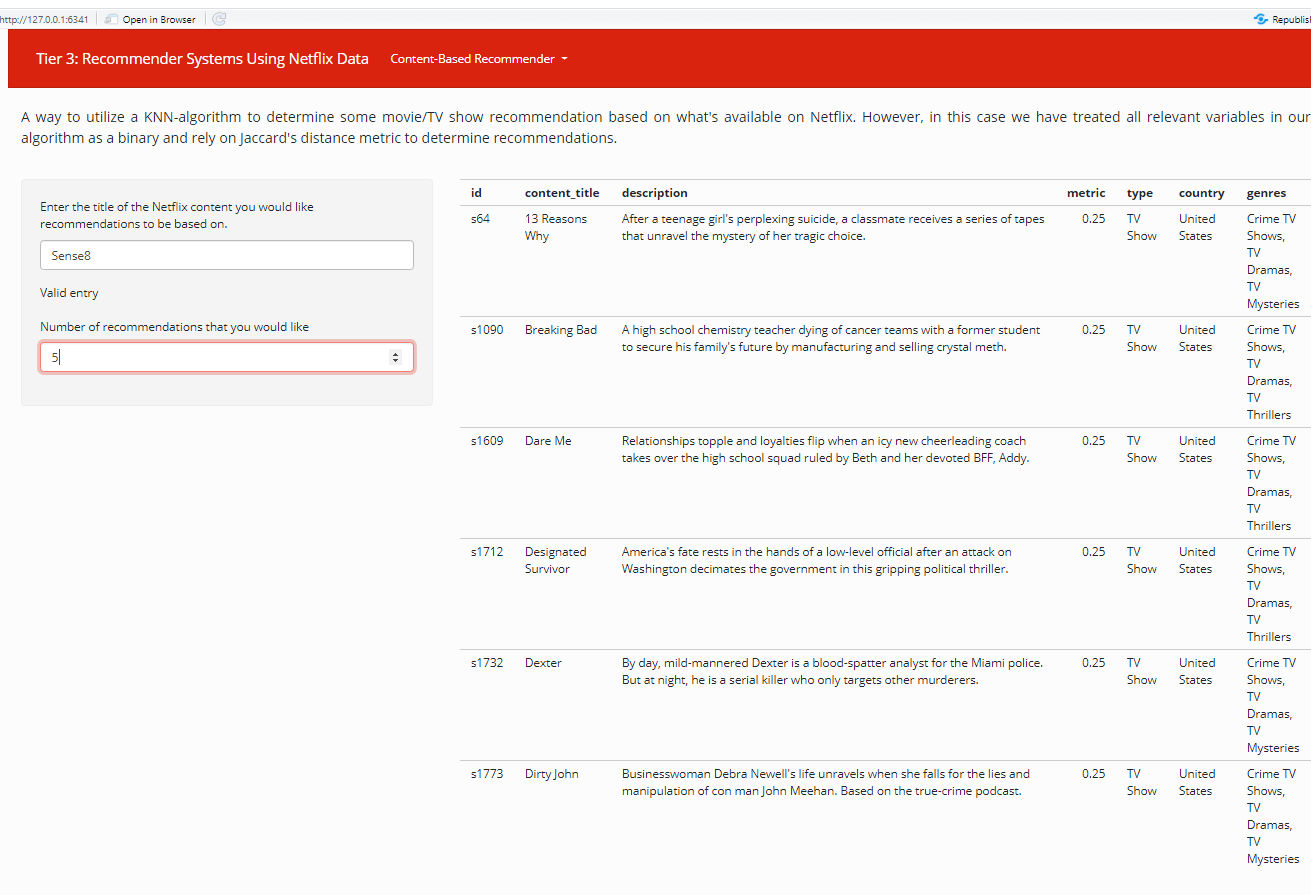

Figure 5. The recommendations could be better here.

Boom! We’ve got a working Netflix content-based recommender system using two different algorithms. Pretty sweet, right? Obviously, we can automate this by wrapping each of the above steps into a single function. (You can check out that code

Figure 7. Using Jaccard's distance to determine Netflix recommendations

There we have it. We’ve got a fully functioning Shiny web app that provides a number of TV series/movie recommendations based on some of your favorites. Pretty cool, huh? If you want to see how this is coded, you can

And with that, we’ve finally reached the end of this series, putting into action some of the advice I mentioned earlier. Thanks for following along, and I hope you found some knowledge and/or inspiration to help with your own unguided projects. If you’re interested in checking out some of my other projects, you can head over to my GitHub. Alternatively, if you’ve got an idea for a collaborative project or just want to connect, hit me up on LinkedIn. Happy projecting, all!